The latest attempt to address the lack of transparency in generative AI development is a new Senate bill that would make it easier for human creators to find out whether their work was used to train artificial intelligence without their consent.

Under the Transparency and Responsibility for Artificial Intelligence Networks (TRAIN) Act, copyright holders who can demonstrate a good-faith belief that their work was used to train a generative AI model would be able to subpoena that model’s training records.

Developers would need to disclose only the training materials sufficient to determine with certainty whether the copyright holder’s works were used. If a developer fails to comply, the law would presume that the developer used the copyrighted material unless the developer proves otherwise.

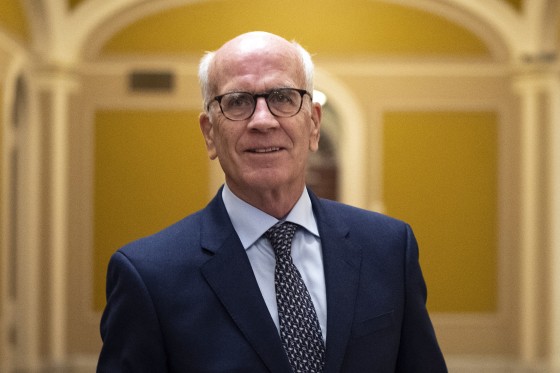

As AI increasingly permeates American society, the nation must set a higher standard for transparency, according to Sen. Peter Welch, D-Vt., who introduced the bill Thursday.

The idea is straightforward, Welch said in a statement: if your work is used to train AI, you, the copyright holder, should be able to determine whether it was used to train a model, and you should be compensated if it was. American musicians, artists, and creators, he said, need a way to find out when AI companies are using their work to train models without the artists’ consent.

Artists worry that the proliferation of easily accessible generative AI technology may allow people to replicate their work without permission, acknowledgment, or payment, raising a number of ethical and legal concerns.

Although many major AI companies do not publicly disclose their models’ training data, a viral Midjourney spreadsheet heightened artists’ concerns earlier this year when it named thousands of people whose work was used to train the company’s well-known AI art generator.

Businesses that depend on human creativity have also tried to take on AI developers.

News organizations including The New York Times and The Wall Street Journal have filed copyright infringement lawsuits against AI firms such as OpenAI and Perplexity AI in recent years. In June, the world’s largest record labels also joined forces to sue two well-known AI music generation companies, claiming that they had improperly trained their models on decades’ worth of protected sound recordings.

As legal tensions increase, more than 36,000 creative professionals, including Radiohead’s Thom Yorke, author James Patterson, and Oscar-winning actor Julianne Moore, have signed an open letter calling for a ban on using human art to train AI without consent.

Although various states have tried to enact specific AI-related restrictions, particularly on deepfakes, there is currently no overarching federal legislation governing the development of AI. In September, California enacted two bills meant to protect actors and other performers from having their digital likenesses used without permission.

Similar bills have been introduced in Congress, such as the AI CONSENT Act, which would require online platforms to obtain informed consent before using consumers’ personal data to train AI, and the bipartisan NO FAKES Act, which would protect human likenesses from nonconsensual digital replication. So far, neither has received a vote.

Welch stated in a press release that the American Federation of Musicians, the Recording Academy, the Screen Actors Guild-American Federation of Television and Radio Artists (SAG-AFTRA), and major record labels Universal Music Group, Warner Music Group, and Sony Music Group have all endorsed the TRAIN Act.

However, with just a few weeks left in this Congress, members are concentrating on must-pass items, such as averting a government shutdown on December 20. Welch’s office said he intends to reintroduce the bill next year, because any legislation that is not enacted must be reintroduced when the new Congress convenes in early January.
